#docker image node express


Deploying Your First Application on OpenShift

Deploying an application on OpenShift can be straightforward with the right guidance. In this tutorial, we'll walk through deploying a simple "Hello World" application on OpenShift. We'll cover creating an OpenShift project, deploying the application, and exposing it to the internet.

Prerequisites

OpenShift CLI (oc): Ensure you have the OpenShift CLI installed. You can download it from the OpenShift CLI Download page.

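OpenShift Cluster: You need access to an OpenShift cluster. You can run a local cluster with Red Hat OpenShift Local (formerly CodeReady Containers; Minishift filled this role for OpenShift 3.x) or use a hosted option such as the Developer Sandbox for Red Hat OpenShift.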

Step 1: Log In to Your OpenShift Cluster

First, log in to your OpenShift cluster using the oc command.

oc login https://<your-cluster-url> --token=<your-token>

Replace <your-cluster-url> with the URL of your OpenShift cluster and <your-token> with your OpenShift token.

Step 2: Create a New Project

Create a new project to deploy your application.

oc new-project hello-world-project

Step 3: Create a Simple Hello World Application

For this tutorial, we'll use a simple Node.js application. Create a new directory for your project and initialize a new Node.js application.

mkdir hello-world-app
cd hello-world-app
npm init -y

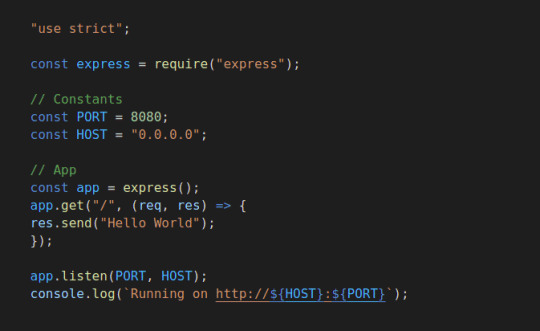

Create a file named server.js and add the following content:

const express = require('express');
const app = express();
const port = 8080;

app.get('/', (req, res) => res.send('Hello World from OpenShift!'));

app.listen(port, () => {
  console.log(`Server running at http://localhost:${port}/`);
});

Install the necessary dependencies.

npm install express
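The server above hard-codes port 8080, which matches the EXPOSE line in the Dockerfile. If you later want to change the port at deploy time without rebuilding the image, a common pattern is to read it from an environment variable. This is a minimal sketch, assuming a variable named PORT and a hypothetical helper called resolvePort; neither is part of the original tutorial.

```javascript
// Hypothetical helper: pick the listening port from an environment object,
// falling back to 8080 (the port used by server.js and the Dockerfile).
function resolvePort(env, fallback = 8080) {
  const raw = env.PORT; // assumption: the variable is named PORT
  if (raw === undefined || raw === "") {
    return fallback;
  }
  const port = Number(raw);
  // Reject non-integers and values outside the valid TCP port range.
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new RangeError(`Invalid PORT value: ${raw}`);
  }
  return port;
}

module.exports = { resolvePort };
```

In server.js you would then write `const port = resolvePort(process.env);` instead of the constant. If you do change the port, keep the Dockerfile's EXPOSE line and the service's target port in sync with it.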

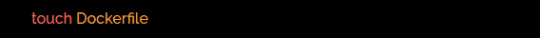

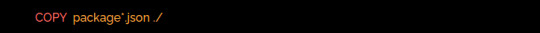

Step 4: Create a Dockerfile

Create a Dockerfile in the same directory with the following content:

# node:14 reached end-of-life in April 2023; use a currently supported LTS image
FROM node:20
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 8080
CMD ["node", "server.js"]

Step 5: Build and Push the Docker Image

Log in to your container registry (e.g., Docker Hub), then build the image and push it.

docker login
docker build -t <your-dockerhub-username>/hello-world-app .
docker push <your-dockerhub-username>/hello-world-app

Replace <your-dockerhub-username> with your Docker Hub username.

Step 6: Deploy the Application on OpenShift

Create a new application in your OpenShift project using the Docker image.

oc new-app <your-dockerhub-username>/hello-world-app

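oc new-app creates the resources your application needs: typically an image stream, a deployment (a DeploymentConfig on older OpenShift 3.x clusters), and a service for the port the image exposes. The deployment then starts a pod running your image.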

Step 7: Expose the Application

Expose your application to create a route, making it accessible from the internet.

oc expose svc/hello-world-app

Step 8: Access the Application

Get the route URL for your application.

oc get routes

Open the host shown in the HOST/PORT column in your web browser. You should see the message "Hello World from OpenShift!".
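To check the route from a script instead of a browser, a small smoke test can help. This is a sketch, assuming Node.js 18 or later for the built-in fetch; the function name checkHelloWorld is hypothetical, and the fetch implementation is a parameter so it can be replaced in tests.

```javascript
// Hypothetical smoke test for the exposed route. Requires Node.js 18+ for the
// global fetch API; fetchImpl can be swapped out (for example, in tests).
async function checkHelloWorld(url, fetchImpl = fetch) {
  const response = await fetchImpl(url);
  const body = await response.text();
  // The route should answer 200 OK with the message sent by server.js.
  return response.status === 200 && body.includes("Hello World from OpenShift!");
}

module.exports = { checkHelloWorld };

// Usage (assumption: <route-host> is the HOST/PORT value from `oc get routes`):
// checkHelloWorld("http://<route-host>/").then((ok) => console.log(ok ? "OK" : "FAILED"));
```

The route created by oc expose is unsecured, which is why the usage example uses http rather than https.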

Conclusion

Congratulations! You've successfully deployed a simple "Hello World" application on OpenShift. This tutorial covered the basic steps, from setting up your project and application to exposing it on the internet. OpenShift offers many more features for managing applications, so feel free to explore its documentation for more advanced topics.

For more details, visit www.qcsdclabs.com.


This Week in Rust 516

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on Twitter or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

Announcing Rust 1.73.0

Polonius update

Project/Tooling Updates

rust-analyzer changelog #202

Announcing: pid1 Crate for Easier Rust Docker Images - FP Complete

bit_seq in Rust: A Procedural Macro for Bit Sequence Generation

tcpproxy 0.4 released

Rune 0.13

Rust on Espressif chips - September 29 2023

esp-rs quarterly planning: Q4 2023

Implementing the #[diagnostic] namespace to improve rustc error messages in complex crates

Observations/Thoughts

Safety vs Performance. A case study of C, C++ and Rust sort implementations

Raw SQL in Rust with SQLx

Thread-per-core

Edge IoT with Rust on ESP: HTTP Client

The Ultimate Data Engineering Chadstack. Running Rust inside Apache Airflow

Why Rust doesn't need a standard div_rem: An LLVM tale - CodSpeed

Making Rust supply chain attacks harder with Cackle

[video] Rust 1.73.0: Everything Revealed in 16 Minutes

Rust Walkthroughs

Let's Build A Cargo Compatible Build Tool - Part 5

How we reduced the memory usage of our Rust extension by 4x

Calling Rust from Python

Acceptance Testing embedded-hal Drivers

5 ways to instantiate Rust structs in tests

Research

Looking for Bad Apples in Rust Dependency Trees Using GraphQL and Trustfall

Miscellaneous

Rust, Open Source, Consulting - Interview with Matthias Endler

Edge IoT with Rust on ESP: Connecting WiFi

Bare-metal Rust in Android

[audio] Learn Rust in a Month of Lunches with Dave MacLeod


[video] Rust 1.73 Release Train

[video] Why is the JavaScript ecosystem switching to Rust?

Crate of the Week

This week's crate is yarer, a library and command-line tool to evaluate mathematical expressions.

Thanks to Gianluigi Davassi for the self-suggestion!

Please submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

Ockam - Make ockam node delete (no args) interactive by asking the user to choose from a list of nodes to delete (tuify)

Ockam - Improve ockam enroll --help text by adding doc comment for identity flag (clap command)

Ockam - Enroll "email: '+' character not allowed"

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from the Rust Project

384 pull requests were merged in the last week

formally demote tier 2 MIPS targets to tier 3

add tvOS to target_os for register_dtor

linker: remove -Zgcc-ld option

linker: remove unstable legacy CLI linker flavors

non_lifetime_binders: fix ICE in lint opaque-hidden-inferred-bound

add async_fn_in_trait lint

add a note to duplicate diagnostics

always preserve DebugInfo in DeadStoreElimination

bring back generic parameters for indices in rustc_abi and make it compile on stable

coverage: allow each coverage statement to have multiple code regions

detect missing => after match guard during parsing

diagnostics: be more careful when suggesting struct fields

don't suggest nonsense suggestions for unconstrained type vars in note_source_of_type_mismatch_constraint

don't call mir.post_mono_checks in codegen

emit feature gate warning for auto traits pre-expansion

ensure that ~const trait bounds on associated functions are in const traits or impls

extend impl's def_span to include its where clauses

fix detecting references to packed unsized fields

fix fast-path for try_eval_scalar_int

fix to register analysis passes with -Zllvm-plugins at link-time

for a single impl candidate, try to unify it with error trait ref

generalize small dominators optimization

improve the suggestion of generic_bound_failure

make FnDef 1-ZST in LLVM debuginfo

more accurately point to where default return type should go

move subtyper below reveal_all and change reveal_all

only trigger refining_impl_trait lint on reachable traits

point to full async fn for future

print normalized ty

properly export function defined in test which uses global_asm!()

remove Key impls for types that involve an AllocId

remove is global hack

remove the TypedArena::alloc_from_iter specialization

show more information when multiple impls apply

suggest pin!() instead of Pin::new() when appropriate

make subtyping explicit in MIR

do not run optimizations on trivial MIR

in smir find_crates returns Vec<Crate> instead of Option<Crate>

add Span to various smir types

miri-script: print which sysroot target we are building

miri: auto-detect no_std where possible

miri: continuation of #3054: enable spurious reads in TB

miri: do not use host floats in simd_{ceil,floor,round,trunc}

miri: ensure RET assignments do not get propagated on unwinding

miri: implement llvm.x86.aesni.* intrinsics

miri: refactor dlsym: dispatch symbols via the normal shim mechanism

miri: support getentropy on macOS as a foreign item

miri: tree Borrows: do not create new tags as 'Active'

add missing inline attributes to Duration trait impls

stabilize Option::as_(mut_)slice

reuse existing Somes in Option::(x)or

fix generic bound of str::SplitInclusive's DoubleEndedIterator impl

cargo: refactor(toml): Make manifest file layout more consistent

cargo: add new package cache lock modes

cargo: add unsupported short suggestion for --out-dir flag

cargo: crates-io: add doc comment for NewCrate struct

cargo: feat: add Edition2024

cargo: prep for automating MSRV management

cargo: set and verify all MSRVs in CI

rustdoc-search: fix bug with multi-item impl trait

rustdoc: rename issue-\d+.rs tests to have meaningful names (part 2)

rustdoc: Show enum discriminant if it is a C-like variant

rustfmt: adjust span derivation for const generics

clippy: impl_trait_in_params now supports impls and traits

clippy: into_iter_without_iter: walk up deref impl chain to find iter methods

clippy: std_instead_of_core: avoid lint inside of proc-macro

clippy: avoid invoking ignored_unit_patterns in macro definition

clippy: fix items_after_test_module for non root modules, add applicable suggestion

clippy: fix ICE in redundant_locals

clippy: fix: avoid changing drop order

clippy: improve redundant_locals help message

rust-analyzer: add config option to use rust-analyzer specific target dir

rust-analyzer: add configuration for the default action of the status bar click action in VSCode

rust-analyzer: do flyimport completions by prefix search for short paths

rust-analyzer: add assist for applying De Morgan's law to Iterator::all and Iterator::any

rust-analyzer: add backtick to surrounding and auto-closing pairs

rust-analyzer: implement tuple return type to tuple struct assist

rust-analyzer: ensure rustfmt runs when configured with ./

rust-analyzer: fix path syntax produced by the into_to_qualified_from assist

rust-analyzer: recognize custom main function as binary entrypoint for runnables

Rust Compiler Performance Triage

A quiet week, with few regressions and improvements.

Triage done by @simulacrum. Revision range: 9998f4add..84d44dd

1 Regression, 2 Improvements, 4 Mixed; 1 of them in rollups

68 artifact comparisons made in total

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

[disposition: merge] RFC: Remove implicit features in a new edition

Tracking Issues & PRs

[disposition: merge] Bump COINDUCTIVE_OVERLAP_IN_COHERENCE to deny + warn in deps

[disposition: merge] document ABI compatibility

[disposition: merge] Broaden the consequences of recursive TLS initialization

[disposition: merge] Implement BufRead for VecDeque<u8>

[disposition: merge] Tracking Issue for feature(file_set_times): FileTimes and File::set_times

[disposition: merge] impl Not, Bit{And,Or}{,Assign} for IP addresses

[disposition: close] Make RefMut Sync

[disposition: merge] Implement FusedIterator for DecodeUtf16 when the inner iterator does

[disposition: merge] Stabilize {IpAddr, Ipv6Addr}::to_canonical

[disposition: merge] rustdoc: hide #[repr(transparent)] if it isn't part of the public ABI

New and Updated RFCs

[new] Add closure-move-bindings RFC

[new] RFC: Include Future and IntoFuture in the 2024 prelude

Call for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

No RFCs issued a call for testing this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Upcoming Events

Rusty Events between 2023-10-11 - 2023-11-08 🦀

Virtual

2023-10-11 | Virtual (Boulder, CO, US) | Boulder Elixir and Rust

Monthly Meetup

2023-10-12 - 2023-10-13 | Virtual (Brussels, BE) | EuroRust

EuroRust 2023

2023-10-12 | Virtual (Nuremberg, DE) | Rust Nuremberg

Rust Nürnberg online

2023-10-18 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

Operating System Primitives (Atomics & Locks Chapter 8)

2023-10-18 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2023-10-19 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2023-10-19 | Virtual (Stuttgart, DE) | Rust Community Stuttgart

Rust-Meetup

2023-10-24 | Virtual (Berlin, DE) | OpenTechSchool Berlin

Rust Hack and Learn | Mirror

2023-10-24 | Virtual (Washington, DC, US) | Rust DC

Month-end Rusting—Fun with 🍌 and 🔎!

2023-10-31 | Virtual (Dallas, TX, US) | Dallas Rust

Last Tuesday

2023-11-01 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

Asia

2023-10-11 | Kuala Lumpur, MY | GoLang Malaysia

Rust Meetup Malaysia October 2023 | Event updates Telegram | Event group chat

2023-10-18 | Tokyo, JP | Tokyo Rust Meetup

Rust and the Age of High-Integrity Languages

Europe

2023-10-11 | Brussels, BE | BeCode Brussels Meetup

Rust on Web - EuroRust Conference

2023-10-12 - 2023-10-13 | Brussels, BE | EuroRust

EuroRust 2023

2023-10-12 | Brussels, BE | Rust Aarhus

Rust Aarhus - EuroRust Conference

2023-10-12 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup at Browns

2023-10-17 | Helsinki, FI | Finland Rust-lang Group

Helsinki Rustaceans Meetup

2023-10-17 | Leipzig, DE | Rust - Modern Systems Programming in Leipzig

SIMD in Rust

2023-10-19 | Amsterdam, NL | Rust Developers Amsterdam Group

Rust Amsterdam Meetup @ Terraform

2023-10-19 | Wrocław, PL | Rust Wrocław

Rust Meetup #35


2023-10-25 | Dublin, IE | Rust Dublin

Biome, web development tooling with Rust

2023-10-26 | Augsburg, DE | Rust - Modern Systems Programming in Leipzig

Augsburg Rust Meetup #3

2023-10-26 | Delft, NL | Rust Nederland

Rust at TU Delft

2023-11-07 | Brussels, BE | Rust Aarhus

Rust Aarhus - Rust and Talk beginners edition

North America

2023-10-11 | Boulder, CO, US | Boulder Rust Meetup

First Meetup - Demo Day and Office Hours

2023-10-12 | Lehi, UT, US | Utah Rust

The Actor Model: Fearless Concurrency, Made Easy w/Chris Mena

2023-10-13 | Cambridge, MA, US | Boston Rust Meetup

Kendall Rust Lunch

2023-10-17 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2023-10-18 | Brookline, MA, US | Boston Rust Meetup

Boston University Rust Lunch

2023-10-19 | Mountain View, CA, US | Mountain View Rust Meetup

Rust Meetup at Hacker Dojo

2023-10-19 | Nashville, TN, US | Music City Rust Developers

Rust Goes Where It Pleases Pt2 - Rust on the front end!

2023-10-19 | Seattle, WA, US | Seattle Rust User Group

Seattle Rust User Group - October Meetup

2023-10-25 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

2023-10-25 | Chicago, IL, US | Deep Dish Rust

Rust Happy Hour

Oceania

2023-10-17 | Christchurch, NZ | Christchurch Rust Meetup Group

Christchurch Rust meetup meeting

2023-10-26 | Brisbane, QLD, AU | Rust Brisbane

October Meetup

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

The Rust mission -- let you write software that's fast and correct, productively -- has never been more alive. So next Rustconf, I plan to celebrate:

All the buffer overflows I didn't create, thanks to Rust

All the unit tests I didn't have to write, thanks to its type system

All the null checks I didn't have to write thanks to Option and Result

All the JS I didn't have to write thanks to WebAssembly

All the impossible states I didn't have to assert "This can never actually happen"

All the JSON field keys I didn't have to manually type in thanks to Serde

All the missing SQL column bugs I caught at compiletime thanks to Diesel

All the race conditions I never had to worry about thanks to the borrow checker

All the connections I can accept concurrently thanks to Tokio

All the formatting comments I didn't have to leave on PRs thanks to Rustfmt

All the performance footguns I didn't create thanks to Clippy

– Adam Chalmers in their RustConf 2023 recap

Thanks to robin for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust


Docker: How to get a Node.js application into a Docker Container

Introduction

The goal of this article is to show you, by example, how to dockerize a Node.js application while giving you a basic understanding of Docker. It walks through setting up the Node.js application and running it with Docker.

What is Docker?

Docker is an open-source platform for developing, shipping, and running applications. Docker enables you to separate your applications from your infrastructure so you can deliver software quickly. With Docker, you can manage your infrastructure in the same ways you manage your applications. By taking advantage of Docker’s methodologies for shipping, testing, and deploying code quickly, you can significantly reduce the delay between writing code and running it in production.

Why Docker?

Developing apps today requires so much more than writing code. Multiple languages, frameworks, architectures, and discontinuous interfaces between tools for each lifecycle stage create enormous complexity. Docker simplifies and accelerates your workflow while giving developers the freedom to innovate with their choice of tools. Sooner or later, every developer hits the situation where an application works on their own system but not on the client's. Docker helps prevent this by packaging the application together with its runtime and dependencies.

How can we use Docker with Node.js?

Before starting, I am assuming that you have a working Docker installation and a basic understanding of how a Node.js application is structured.

First, we will create a simple web application in Node.js, then build a Docker image for that application, and finally run a container from that image.

Set Up the Node.js Server

Run npm init and enter the required details for your project.

Install Express in the project with npm i express.

Then, create a server.js file that defines a web app using the Express.js framework:
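The server.js listing itself did not survive in this post. As a stand-in, here is a minimal sketch of a server that answers on port 8080. Note that it deliberately swaps Express for Node's built-in http module so it runs without npm install; the greeting text and the start helper are assumptions, not the author's code.

```javascript
const http = require("node:http");

const PORT = 8080; // the port checked at http://localhost:8080/ and exposed in the Dockerfile

// Reply to GET / with a plain-text greeting; anything else gets a 404.
function handler(req, res) {
  if (req.method === "GET" && req.url === "/") {
    res.writeHead(200, { "Content-Type": "text/plain; charset=utf-8" });
    res.end("Hello World!"); // assumed greeting; the original response text is unknown
    return;
  }
  res.writeHead(404, { "Content-Type": "text/plain; charset=utf-8" });
  res.end("Not Found");
}

// Create the server and start listening; returns the http.Server instance.
function start(port = PORT) {
  const server = http.createServer(handler);
  server.listen(port, () => {
    console.log(`Server running at http://localhost:${server.address().port}/`);
  });
  return server;
}

module.exports = { handler, start };

// To use this file as server.js, call start() at the bottom:
// start();
```

With Express, the equivalent is an app.get('/') route plus app.listen(8080); the built-in version just avoids the extra dependency for a quick test.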

Now test the server: start the application with node server.js, then open http://localhost:8080/ and check the response.

Next, we'll look at how to run this app inside a Docker container based on the official Node.js Docker image. First, create a Dockerfile, where we will add the build instructions.

#Dockerfile

Create a file named Dockerfile in the root directory of the project, for example with the touch command.

Open the Dockerfile in any editor and add the instructions below.

First, choose the base image the container will run on with the FROM instruction. Here I am using the latest stable version of the official Node.js image.

Next, set a working directory for the application inside the image with the WORKDIR instruction; all project files will go there.

This image comes with Node.js and npm already installed, so the next step is to install your app's dependencies with npm install. To do that, first copy the package.json file into the image.

To bundle your app's source code inside the Docker image, use the COPY instruction.

Your app binds to port 8080, so use the EXPOSE instruction to declare that port. EXPOSE by itself only documents the port; you still publish it when starting the container, for example with docker run -p 8080:8080.

Finally, run the application with the CMD instruction, which executes node server.js.
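If the Dockerfile uses the exec form of CMD (CMD ["node", "server.js"]), Node.js runs as PID 1 inside the container. docker stop sends SIGTERM, and Linux does not apply the default terminate action to PID 1 for signals it has no handler for, so an unprepared Node process keeps running until Docker sends SIGKILL after its grace period (10 seconds by default). A minimal sketch of an explicit handler follows; installShutdownHandler is a hypothetical helper that takes the server and a process-like object so the logic can be tested without real signals, and the 8-second fallback is an arbitrary choice that stays under Docker's default.

```javascript
// Hypothetical helper: close the HTTP server cleanly when the container is stopped.
// `proc` is the real `process` by default, but any EventEmitter with an exit() method works.
function installShutdownHandler(server, proc = process, forceAfterMs = 8000) {
  const shutdown = (signal) => {
    console.log(`Received ${signal}, closing server`);
    // Fallback: force an exit if open connections keep close() from finishing.
    const timer = setTimeout(() => proc.exit(1), forceAfterMs);
    timer.unref(); // do not keep the event loop alive just for this timer
    // Stop accepting new connections; exit once existing ones have finished.
    server.close(() => {
      clearTimeout(timer);
      proc.exit(0);
    });
  };
  proc.once("SIGTERM", shutdown); // sent by `docker stop`
  proc.once("SIGINT", shutdown); // sent by Ctrl+C in an interactive `docker run -it`
  return shutdown;
}

module.exports = { installShutdownHandler };
```

With the Express server, app.listen(...) returns the underlying http.Server, so `installShutdownHandler(app.listen(8080))` is enough to wire it up.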


Containerize SpringBoot Node Express Apps & Deploy on Azure


Learn everything about Docker and run your Spring Boot and Node.js apps inside containers using Docker on the Azure cloud. The topics covered in this course are: why applications need to run inside a container, what Docker is, what an image is, what a container is, what Docker Hub is, what a Dockerfile is, the benefits of using Docker and running applications inside a container, how to…


Airflow Clickhouse


Airflow Clickhouse Example

airflow-clickhouse-plugin 0.6.0 (Mar 13, 2021): Airflow plugin to execute ClickHouse commands and queries.
Baluchon 0.0.1 (Dec 19, 2020): A tool for managing migrations in ClickHouse.
Domination 1.2 (Sep 21, 2020): Real-time application in order to dominate Humans.
Intelecy-pandahouse 0.3.2 (Aug 25, 2020): Pandas interface for…

I investigate how fast ClickHouse 18.16.1 can query 1.1 billion taxi journeys on a 3-node, 108-core AWS EC2 cluster.

Convert CSVs to ORC Faster: I compare the ORC file construction times of Spark 2.4.0, Hive 2.3.4 and Presto 0.214.

Airflow Clickhouse Connection

| Package | Access | Summary | Updated |
|---|---|---|---|
| jupyterlab | public | An extensible environment for interactive and reproducible computing, based on the Jupyter Notebook and Architecture. | 2021-04-22 |
| httpcore | public | The next generation HTTP client. | 2021-04-22 |
| jsondiff | public | Diff JSON and JSON-like structures in Python | 2021-04-22 |
| jupyter_kernel_gateway | public | Jupyter Kernel Gateway | 2021-04-22 |
| reportlab | public | Open-source engine for creating complex, data-driven PDF documents and custom vector graphics | 2021-04-21 |
| pytest-asyncio | public | Pytest support for asyncio | 2021-04-21 |
| enaml | public | Declarative DSL for building rich user interfaces in Python | 2021-04-21 |
| oniguruma | public | A regular expression library. | 2021-04-21 |
| cfn-lint | public | CloudFormation Linter | 2021-04-21 |
| aws-c-common | public | Core c99 package for AWS SDK for C. Includes cross-platform primitives, configuration, data structures, and error handling. | 2021-04-21 |
| nginx | public | Nginx is an HTTP and reverse proxy server | 2021-04-21 |
| libgcrypt | public | A general purpose cryptographic library originally based on code from GnuPG. | 2021-04-21 |
| google-auth | public | Google authentication library for Python | 2021-04-21 |
| sqlalchemy-utils | public | Various utility functions for SQLAlchemy | 2021-04-21 |
| flask-apscheduler | public | Flask-APScheduler is a Flask extension which adds support for the APScheduler | 2021-04-21 |
| datadog | public | The Datadog Python library | 2021-04-21 |
| cattrs | public | Complex custom class converters for attrs. | 2021-04-21 |
| argcomplete | public | Bash tab completion for argparse | 2021-04-21 |
| luarocks | public | LuaRocks is the package manager for Lua modules | 2021-04-21 |
| srsly | public | Modern high-performance serialization utilities for Python | 2021-04-19 |
| pytest-benchmark | public | A py.test fixture for benchmarking code | 2021-04-19 |
| fastavro | public | Fast read/write of AVRO files | 2021-04-19 |
| catalogue | public | Super lightweight function registries for your library | 2021-04-19 |
| zarr | public | An implementation of chunked, compressed, N-dimensional arrays for Python. | 2021-04-19 |
| python-engineio | public | Engine.IO server | 2021-04-19 |
| nuitka | public | Python compiler with full language support and CPython compatibility | 2021-04-19 |
| hypothesis | public | A library for property based testing | 2021-04-19 |
| flask-admin | public | Simple and extensible admin interface framework for Flask | 2021-04-19 |
| hyperframe | public | Pure-Python HTTP/2 framing | 2021-04-19 |
| python | public | General purpose programming language | 2021-04-17 |
| python-regr-testsuite | public | General purpose programming language | 2021-04-17 |
| pyamg | public | Algebraic Multigrid Solvers in Python | 2021-04-17 |
| luigi | public | Workflow mgmt + task scheduling + dependency resolution. | 2021-04-17 |
| libpython-static | public | General purpose programming language | 2021-04-17 |
| dropbox | public | Official Dropbox API Client | 2021-04-17 |
| s3fs | public | Convenient Filesystem interface over S3 | 2021-04-17 |
| furl | public | URL manipulation made simple. | 2021-04-17 |
| sympy | public | Python library for symbolic mathematics | 2021-04-15 |
| spyder | public | The Scientific Python Development Environment | 2021-04-15 |
| sqlalchemy | public | Database Abstraction Library. | 2021-04-15 |
| rtree | public | R-Tree spatial index for Python GIS | 2021-04-15 |
| pandas | public | High-performance, easy-to-use data structures and data analysis tools. | 2021-04-15 |
| poetry | public | Python dependency management and packaging made easy | 2021-04-15 |
| freetds | public | FreeTDS is a free implementation of Sybase's DB-Library, CT-Library, and ODBC libraries | 2021-04-15 |
| ninja | public | A small build system with a focus on speed | 2021-04-15 |
| cython | public | The Cython compiler for writing C extensions for the Python language | 2021-04-15 |
| conda-package-handling | public | Create and extract conda packages of various formats | 2021-04-15 |
| conda | public | OS-agnostic, system-level binary package and environment manager. | 2021-04-15 |
| colorlog | public | Log formatting with colors! | 2021-04-15 |
| bitarray | public | Efficient arrays of booleans (C extension) | 2021-04-15 |

Reverse Dependencies of apache-airflow


The following projects have a declared dependency on apache-airflow:


acryl-datahub — A CLI to work with DataHub metadata

AGLOW — AGLOW: Automated Grid-enabled LOFAR Workflows

aiflow — AI Flow, an extend operators library for airflow, which helps AI engineer to write less, reuse more, integrate easily.

aircan — no summary

airflow-add-ons — Airflow extensible operators and sensors

airflow-aws-cost-explorer — Apache Airflow Operator exporting AWS Cost Explorer data to local file or S3

airflow-bigquerylogger — BigQuery logger handler for Airflow

airflow-bio-utils — Airflow utilities for biological sequences

airflow-cdk — Custom cdk constructs for apache airflow

airflow-clickhouse-plugin — airflow-clickhouse-plugin - Airflow plugin to execute ClickHouse commands and queries

airflow-code-editor — Apache Airflow code editor and file manager

airflow-cyberark-secrets-backend — An Airflow custom secrets backend for CyberArk CCP

airflow-dbt — Apache Airflow integration for dbt

airflow-declarative — Airflow DAGs done declaratively

airflow-diagrams — Auto-generated Diagrams from Airflow DAGs.

airflow-ditto — An airflow DAG transformation framework

airflow-django — A kit for using Django features, like its ORM, in Airflow DAGs.

airflow-docker — An opinionated implementation of exclusively using airflow DockerOperators for all Operators

airflow-dvc — DVC operator for Airflow

airflow-ecr-plugin — Airflow ECR plugin

airflow-exporter — Airflow plugin to export dag and task based metrics to Prometheus.

airflow-extended-metrics — Package to expand Airflow for custom metrics.

airflow-fs — Composable filesystem hooks and operators for Airflow.

airflow-gitlab-webhook — Apache Airflow Gitlab Webhook integration

airflow-hdinsight — HDInsight provider for Airflow

airflow-imaging-plugins — Airflow plugins to support Neuroimaging tasks.

airflow-indexima — Indexima Airflow integration

airflow-notebook — Jupyter Notebook operator for Apache Airflow.

airflow-plugin-config-storage — Inject connections into the airflow database from configuration

airflow-plugin-glue-presto-apas — An Airflow Plugin to Add a Partition As Select(APAS) on Presto that uses Glue Data Catalog as a Hive metastore.

airflow-prometheus — Modern Prometheus exporter for Airflow (based on robinhood/airflow-prometheus-exporter)

airflow-prometheus-exporter — Prometheus Exporter for Airflow Metrics

airflow-provider-fivetran — A Fivetran provider for Apache Airflow

airflow-provider-great-expectations — An Apache Airflow provider for Great Expectations

airflow-provider-hightouch — Hightouch Provider for Airflow

airflow-queue-stats — An airflow plugin for viewing queue statistics.

airflow-spark-k8s — Airflow integration for Spark On K8s

airflow-spell — Apache Airflow integration for spell.run

airflow-tm1 — A package to simplify connecting to the TM1 REST API from Apache Airflow

airflow-util-dv — no summary

airflow-waterdrop-plugin — A FastAPI Middleware of Apollo(Config Server By CtripCorp) to get server config in every request.

airflow-windmill — Drag'N'Drop Web Frontend for Building and Managing Airflow DAGs

airflowdaggenerator — Dynamically generates and validates Python Airflow DAG file based on a Jinja2 Template and a YAML configuration file to encourage code re-usability

airkupofrod — Takes a deployment in your kubernetes cluster and turns its pod template into a KubernetesPodOperator object.

airtunnel — airtunnel – tame your Airflow!

apache-airflow-backport-providers-amazon — Backport provider package apache-airflow-backport-providers-amazon for Apache Airflow

apache-airflow-backport-providers-apache-beam — Backport provider package apache-airflow-backport-providers-apache-beam for Apache Airflow

apache-airflow-backport-providers-apache-cassandra — Backport provider package apache-airflow-backport-providers-apache-cassandra for Apache Airflow

apache-airflow-backport-providers-apache-druid — Backport provider package apache-airflow-backport-providers-apache-druid for Apache Airflow

apache-airflow-backport-providers-apache-hdfs — Backport provider package apache-airflow-backport-providers-apache-hdfs for Apache Airflow

apache-airflow-backport-providers-apache-hive — Backport provider package apache-airflow-backport-providers-apache-hive for Apache Airflow

apache-airflow-backport-providers-apache-kylin — Backport provider package apache-airflow-backport-providers-apache-kylin for Apache Airflow

apache-airflow-backport-providers-apache-livy — Backport provider package apache-airflow-backport-providers-apache-livy for Apache Airflow

apache-airflow-backport-providers-apache-pig — Backport provider package apache-airflow-backport-providers-apache-pig for Apache Airflow

apache-airflow-backport-providers-apache-pinot — Backport provider package apache-airflow-backport-providers-apache-pinot for Apache Airflow

apache-airflow-backport-providers-apache-spark — Backport provider package apache-airflow-backport-providers-apache-spark for Apache Airflow

apache-airflow-backport-providers-apache-sqoop — Backport provider package apache-airflow-backport-providers-apache-sqoop for Apache Airflow

apache-airflow-backport-providers-celery — Backport provider package apache-airflow-backport-providers-celery for Apache Airflow

apache-airflow-backport-providers-cloudant — Backport provider package apache-airflow-backport-providers-cloudant for Apache Airflow

apache-airflow-backport-providers-cncf-kubernetes — Backport provider package apache-airflow-backport-providers-cncf-kubernetes for Apache Airflow

apache-airflow-backport-providers-databricks — Backport provider package apache-airflow-backport-providers-databricks for Apache Airflow

apache-airflow-backport-providers-datadog — Backport provider package apache-airflow-backport-providers-datadog for Apache Airflow

apache-airflow-backport-providers-dingding — Backport provider package apache-airflow-backport-providers-dingding for Apache Airflow

apache-airflow-backport-providers-discord — Backport provider package apache-airflow-backport-providers-discord for Apache Airflow

apache-airflow-backport-providers-docker — Backport provider package apache-airflow-backport-providers-docker for Apache Airflow

apache-airflow-backport-providers-elasticsearch — Backport provider package apache-airflow-backport-providers-elasticsearch for Apache Airflow

apache-airflow-backport-providers-email — Back-ported airflow.providers.email.* package for Airflow 1.10.*

apache-airflow-backport-providers-exasol — Backport provider package apache-airflow-backport-providers-exasol for Apache Airflow

apache-airflow-backport-providers-facebook — Backport provider package apache-airflow-backport-providers-facebook for Apache Airflow

apache-airflow-backport-providers-google — Backport provider package apache-airflow-backport-providers-google for Apache Airflow

apache-airflow-backport-providers-grpc — Backport provider package apache-airflow-backport-providers-grpc for Apache Airflow

apache-airflow-backport-providers-hashicorp — Backport provider package apache-airflow-backport-providers-hashicorp for Apache Airflow

apache-airflow-backport-providers-jdbc — Backport provider package apache-airflow-backport-providers-jdbc for Apache Airflow

apache-airflow-backport-providers-jenkins — Backport provider package apache-airflow-backport-providers-jenkins for Apache Airflow

apache-airflow-backport-providers-jira — Backport provider package apache-airflow-backport-providers-jira for Apache Airflow

apache-airflow-backport-providers-microsoft-azure — Backport provider package apache-airflow-backport-providers-microsoft-azure for Apache Airflow

apache-airflow-backport-providers-microsoft-mssql — Backport provider package apache-airflow-backport-providers-microsoft-mssql for Apache Airflow

apache-airflow-backport-providers-microsoft-winrm — Backport provider package apache-airflow-backport-providers-microsoft-winrm for Apache Airflow

apache-airflow-backport-providers-mongo — Backport provider package apache-airflow-backport-providers-mongo for Apache Airflow

apache-airflow-backport-providers-mysql — Backport provider package apache-airflow-backport-providers-mysql for Apache Airflow

apache-airflow-backport-providers-neo4j — Backport provider package apache-airflow-backport-providers-neo4j for Apache Airflow

apache-airflow-backport-providers-odbc — Backport provider package apache-airflow-backport-providers-odbc for Apache Airflow

apache-airflow-backport-providers-openfaas — Backport provider package apache-airflow-backport-providers-openfaas for Apache Airflow

apache-airflow-backport-providers-opsgenie — Backport provider package apache-airflow-backport-providers-opsgenie for Apache Airflow

apache-airflow-backport-providers-oracle — Backport provider package apache-airflow-backport-providers-oracle for Apache Airflow

apache-airflow-backport-providers-pagerduty — Backport provider package apache-airflow-backport-providers-pagerduty for Apache Airflow

apache-airflow-backport-providers-papermill — Backport provider package apache-airflow-backport-providers-papermill for Apache Airflow

apache-airflow-backport-providers-plexus — Backport provider package apache-airflow-backport-providers-plexus for Apache Airflow

apache-airflow-backport-providers-postgres — Backport provider package apache-airflow-backport-providers-postgres for Apache Airflow

apache-airflow-backport-providers-presto — Backport provider package apache-airflow-backport-providers-presto for Apache Airflow

apache-airflow-backport-providers-qubole — Backport provider package apache-airflow-backport-providers-qubole for Apache Airflow

apache-airflow-backport-providers-redis — Backport provider package apache-airflow-backport-providers-redis for Apache Airflow

apache-airflow-backport-providers-salesforce — Backport provider package apache-airflow-backport-providers-salesforce for Apache Airflow

apache-airflow-backport-providers-samba — Backport provider package apache-airflow-backport-providers-samba for Apache Airflow

apache-airflow-backport-providers-segment — Backport provider package apache-airflow-backport-providers-segment for Apache Airflow

apache-airflow-backport-providers-sendgrid — Backport provider package apache-airflow-backport-providers-sendgrid for Apache Airflow

apache-airflow-backport-providers-sftp — Backport provider package apache-airflow-backport-providers-sftp for Apache Airflow

apache-airflow-backport-providers-singularity — Backport provider package apache-airflow-backport-providers-singularity for Apache Airflow

apache-airflow-backport-providers-slack — Backport provider package apache-airflow-backport-providers-slack for Apache Airflow

apache-airflow-backport-providers-snowflake — Backport provider package apache-airflow-backport-providers-snowflake for Apache Airflow

A Gentle Introduction to Using a Docker Container as a Dev Environment

Sarcasm disclaimer: This article is mostly sarcasm. I do not think that I actually speak for Dylan Thomas and I would never encourage you to foist a light theme on people who don’t want it. No matter how wrong they may be.

When Dylan Thomas penned the words, “Do not go gentle into that good night,” he was talking about death. But if he were alive today, he might be talking about Linux containers. There is no way to know for sure because he passed away in 1953, but this is the internet, so I feel extremely confident speaking authoritatively on his behalf.

My confidence comes from a complete overestimation of my skills and intelligence coupled with the fact that I recently tried to configure a Docker container as my development environment. And I found myself raging against the dying of the light as Docker rejected every single attempt I made like I was me and it was King James screaming, “NOT IN MY HOUSE!”

Pain is an excellent teacher. And because I care about you and have no other ulterior motives, I want to use that experience to give you a “gentle” introduction to using a Docker container as a development environment. But first, let’s talk about whyyyyyyyyyyy you would ever want to do that.

kbutwhytho?

Close your eyes and picture this: a grown man dressed up like a fox.

Wait. No. Wrong scenario.

Instead, picture a project that contains not just your source code, but your entire development environment and all the dependencies and runtimes your app needs. You could then give that project to anyone anywhere (like the fox guy) and they could run your project without having to make a lick of configuration changes to their own environment.

This is exactly what Docker containers do. A Dockerfile defines an entire runtime environment with a single file. All you would need is a way to develop inside of that container.

Wait for it…

VS Code and Remote – Containers

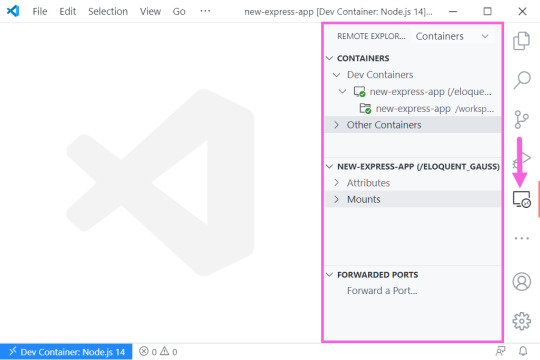

VS Code has an extension called Remote – Containers that lets you load a project inside a Docker container and connect to it with VS Code. That’s some Inception-level stuff right there. (Did he make it out?! THE TALISMAN NEVER ACTUALLY STOPS SPINNING.) It’s easier to understand if we (and by “we” I mean you) look at it in action.

Adding a container to a project

Let’s say for a moment that you are on a high-end gaming PC that you built for your kids and then decided to keep it for yourself. I mean, why exactly do they deserve a new computer again? Oh, that’s right. They don’t. They can’t even take out the trash on Sundays even though you TELL THEM EVERY WEEK.

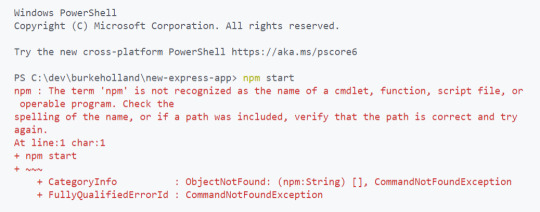

This is a fresh Windows machine with WSL2 and Docker installed, but that’s all. Were you to try and run a Node.js project on this machine, PowerShell would tell you that it has absolutely no idea what you are referring to and maybe you misspelled something. Which, in all fairness, you do suck at spelling. Remember that time in 4ᵗʰ grade when you got knocked out of the first round of the spelling bee because you couldn’t spell “fried.” FRYED? There’s no “Y” in there!

Now this is not a huge problem — you could always skip off and install Node.js. But let’s say for a second that you can’t be bothered to do that and you’re pretty sure that skipping is not something adults do.

Instead, we can configure this project to run in a container that already has Node.js installed. Now, as I’ve already discussed, I have no idea how to use Docker. I can barely use the microwave. Fortunately, VS Code will configure your project for you — to an extent.

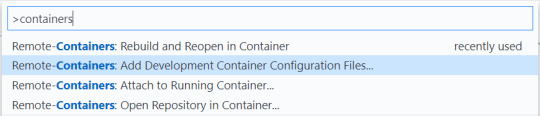

From the Command Palette, there is an “Add Development Container Configuration Files…” command. This command looks at your project and tries to add the proper container definition.

In this case, VS Code knows I’ve got a Node project here, so I’ll just pick Node.js 14. Yes, I am aware that 12 is LTS right now, but it’s gonna be 14 in [checks watch] one month and I’m an early adopter, as is evidenced by my interest in container technology just now in 2020.

This will add a .devcontainer folder with some assets inside. One is a Dockerfile that specifies the Node.js image that we’re going to use, and the other is a devcontainer.json that holds some project-level configuration.

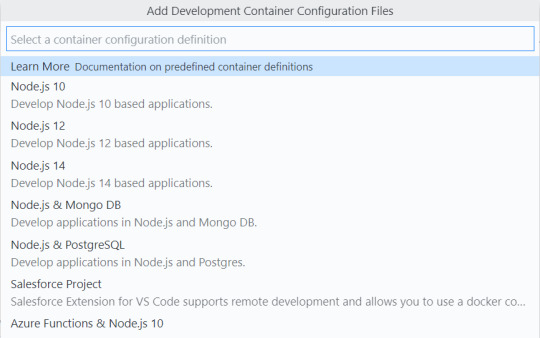

Now, before we touch anything and break it all (we’ll get to that, trust me), we can select “Rebuild and Reopen in Container” from the Command Palette. This will restart VS Code and set about building the container. Once it completes (which can take a while the first time if you’re not on a high-end gaming PC that your kids will never know the joys of), the project will open inside of the container. VS Code is connected to the container, and you know that because it says so in the lower left-hand corner.

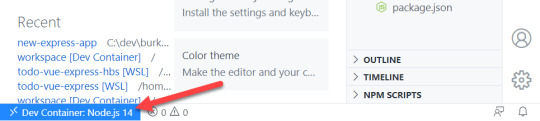

Now if we open the terminal in VS Code, PowerShell is conspicuously absent because we are not on Windows anymore, Dorothy. We are now in a Linux container. And we can both npm install and npm start in this magical land.

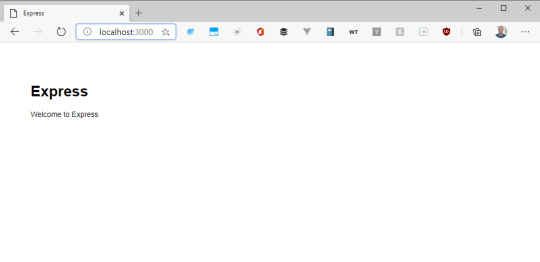

This is an Express app, so it should be running on port 3000. But if you try and visit that port, it won’t load. This is because we need to forward port 3000 in the container to port 3000 on our localhost. As one does.

Fortunately, there is a UI for this.

The Remote Containers extension puts a “Remote Explorer” icon in the Activity Bar. Which is on the left-hand side for you, but the right-hand side for me. Because I moved it and you should too.

There are three sections here, but look at the bottom one, which says “Port Forwarding.” I’m not the sandwich with the most lettuce, but I’m pretty sure that’s what we want here. You can click on “Forward a Port” and type “3000.” Now if we try and hit the app from the browser…

Mostly, things “just worked.” But the configuration is also quite simple. Let’s look at how we can start to customize this setup by automating some of the aspects of the project itself. Project-specific configuration is done in the devcontainer.json file.

Automating project configuration

First off, we can automate the port forwarding by adding a forwardPorts property and specifying 3000 as the value. We can also automate the npm install command by specifying the postCreateCommand property. And let’s face it, we could all stand to run AT LEAST one less npm install.

    {
      // ...
      // Use 'forwardPorts' to make a list of ports inside the container available locally.
      "forwardPorts": [3000],
      // Use 'postCreateCommand' to run commands after the container is created.
      "postCreateCommand": "npm install",
      // ...
    }
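After forwarding port 3000, you can confirm from the host that something is actually listening by attempting a plain TCP connection. The sketch below is a hypothetical helper, `port_is_open`, that I wrote for illustration. It is not part of VS Code or the Remote – Containers extension. It only checks that a socket accepts connections, not that the Express app returns a sensible page.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    # 3000 is the port the Express app in this walkthrough listens on.
    print("localhost:3000 reachable:", port_is_open("localhost", 3000))
```

If this prints False while the app is running inside the container, the port most likely is not forwarded yet.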

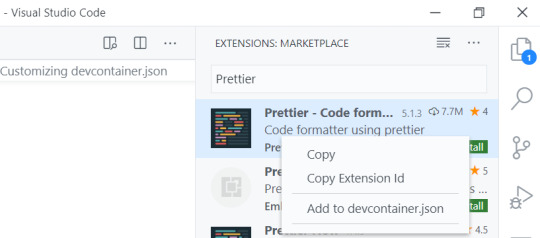

Additionally, we can include VS Code extensions. The VS Code that runs in the Docker container does not automatically get every extension you have installed. You have to install them in the container, or just include them like we’re doing here.

Extensions like Prettier and ESLint are perfect for this kind of scenario. We can also take this opportunity to foist a light theme on everyone because it turns out that dark themes are worse for reading and comprehension. I feel like a prophet.

    // For format details, see https://aka.ms/vscode-remote/devcontainer.json or this file's README at:
    // https://github.com/microsoft/vscode-dev-containers/tree/v0.128.0/containers/javascript-node-14
    {
      // ...
      // Add the IDs of extensions you want installed when the container is created.
      "extensions": [
        "dbaeumer.vscode-eslint",
        "esbenp.prettier-vscode",
        "GitHub.github-vscode-theme"
      ]
      // ...
    }
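The devcontainer.json file is "JSON with comments," which is why it can carry `//` comments. It also tolerates trailing commas, like the one after `"npm install",` in the earlier sample. Python's standard `json` module rejects both. If you ever want to read these settings from a script, for example to list which ports a project forwards, you need to strip them first.

Here is a minimal sketch. `load_jsonc` and its helpers are hypothetical names, not an official parser. The sketch assumes only `//` line comments, `/* */` block comments, and trailing commas need handling, and it leaves anything inside string literals alone.

```python
import json


def _strip_comments(text: str) -> str:
    """Remove // and /* */ comments that appear outside JSON strings."""
    out, i, n, in_string = [], 0, len(text), False
    while i < n:
        ch = text[i]
        if in_string:
            out.append(ch)
            if ch == "\\" and i + 1 < n:
                out.append(text[i + 1])  # keep escaped chars such as \" verbatim
                i += 2
                continue
            if ch == '"':
                in_string = False
            i += 1
        elif ch == '"':
            in_string = True
            out.append(ch)
            i += 1
        elif text.startswith("//", i):
            end = text.find("\n", i)  # line comment: skip to the newline
            i = n if end == -1 else end
        elif text.startswith("/*", i):
            end = text.find("*/", i + 2)  # block comment: skip past */
            i = n if end == -1 else end + 2
        else:
            out.append(ch)
            i += 1
    return "".join(out)


def _strip_trailing_commas(text: str) -> str:
    """Drop commas followed (ignoring whitespace) by a closing } or ]."""
    out, i, n, in_string = [], 0, len(text), False
    while i < n:
        ch = text[i]
        if in_string:
            out.append(ch)
            if ch == "\\" and i + 1 < n:
                out.append(text[i + 1])
                i += 2
                continue
            if ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
            out.append(ch)
        elif ch == ",":
            j = i + 1
            while j < n and text[j] in " \t\r\n":
                j += 1
            if j < n and text[j] in "}]":
                i += 1
                continue  # trailing comma: skip it
            out.append(ch)
        else:
            out.append(ch)
        i += 1
    return "".join(out)


def load_jsonc(text: str):
    """Parse JSON-with-comments text such as a devcontainer.json file."""
    return json.loads(_strip_trailing_commas(_strip_comments(text)))


SAMPLE = """{
  // Use 'forwardPorts' to make a list of ports inside the container available locally.
  "forwardPorts": [3000],
  // Use 'postCreateCommand' to run commands after the container is created.
  "postCreateCommand": "npm install",
  "extensions": [
    "dbaeumer.vscode-eslint",
    "esbenp.prettier-vscode",
  ],
}"""

if __name__ == "__main__":
    print(load_jsonc(SAMPLE)["forwardPorts"])  # [3000]
```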

If you’re wondering where to find those extension IDs, they come up in IntelliSense (Ctrl+Space) if you have the extensions installed. If not, search the extension marketplace, right-click the extension, and select “Copy Extension ID.” Or even better, just select “Add to devcontainer.json.”

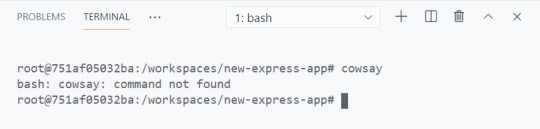

By default, the Node.js container that VS Code gives you has things like git and cURL already installed. What it doesn’t have is “cowsay.” And we can’t have a Linux environment without cowsay. That’s in the Linux bylaws (it’s not). I don’t make the rules. We need to customize this container to add that.

Automating environment configuration

This is where things went off the rails for me. In order to add software to a development container, you have to edit the Dockerfile. And Linux has no tolerance for your shenanigans or mistakes.

The base Docker image that you get with the container configurations in VS Code is based on Debian Linux, which uses the apt-get package manager.

apt-get install cowsay

We can add this to the end of the Dockerfile. Whenever you install something from apt-get, run an apt-get update first. This command updates the list of packages and package repos so that you have the most current list cached. If you don’t do this, the container build will fail and tell you that it can’t find “cowsay.”

    # To fully customize the contents of this image, use the following Dockerfile instead:
    # https://github.com/microsoft/vscode-dev-containers/tree/v0.128.0/containers/javascript-node-14/.devcontainer/Dockerfile
    FROM mcr.microsoft.com/vscode/devcontainers/javascript-node:0-14

    # ** Install additional packages **
    RUN apt-get update \
        && apt-get -y install cowsay

A few things to note here…

That RUN command is a Docker thing and it creates a new “layer.” Layers are how Docker knows what has changed and what in the image needs to be rebuilt when you rebuild the container. They’re kind of like cake layers, except that you don’t want a lot of them because, while enormous cakes are awesome, enormous containers are not. You should try to keep related logic together in the same RUN command so that you don’t create unnecessary layers.

That \ at the end of a line is a line continuation: it tells Docker the command carries on to the next line. You need it for multi-line commands. Leave it off and you will know the pain of many failed Docker builds.

The && is how you chain an additional command onto the same RUN instruction. The second command only runs if the first one succeeds. For the love of god, don’t forget that \ on the previous line.

The -y flag is important because by default, apt-get is going to prompt you to ensure you really want to install what you just tried to install. This will cause the container build to fail because there is nobody there to say Y or N. The -y flag is shorthand for “don’t bother me with your silly confirmation prompts”. Apparently everyone is supposed to know this already. I didn’t know it until about four hours ago.
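The pieces in that list fit together in a predictable way. The sketch below assembles several shell commands into a single RUN instruction. It puts a ` \` continuation at the end of every line except the last, and `&&` at the start of each follow-on command. `run_instruction` is a hypothetical helper written only for illustration. Docker has no such function, and in practice you just type the Dockerfile by hand.

```python
def run_instruction(commands: list[str]) -> str:
    """Join shell commands into one Dockerfile RUN instruction (one layer).

    Every line but the last ends with a backslash so Docker reads the whole
    thing as a single instruction; '&&' makes each command run only if the
    previous one succeeded.
    """
    if not commands:
        raise ValueError("need at least one command")
    first, *rest = commands
    lines = [f"RUN {first}"] + [f"    && {cmd}" for cmd in rest]
    return " \\\n".join(lines)


if __name__ == "__main__":
    print(run_instruction([
        "apt-get update",             # refresh the package list first
        "apt-get -y install cowsay",  # -y answers the confirmation prompt
    ]))
```

Running it prints the same two-line RUN instruction used in the Dockerfile above.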

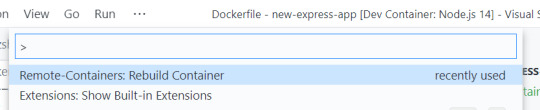

Use the Command Palette to select “Rebuild Container”…

And, just like that…

It doesn’t work.

This is the first lesson in what I like to call “Linux Vertigo.” There are so many distributions of Linux and they don’t all handle things the same way. It can be difficult to figure out why things work in one place (Mac, WSL2) and don’t work in others. The reason “cowsay” isn’t available is that Debian puts “cowsay” in /usr/games, which is not included in the PATH environment variable.

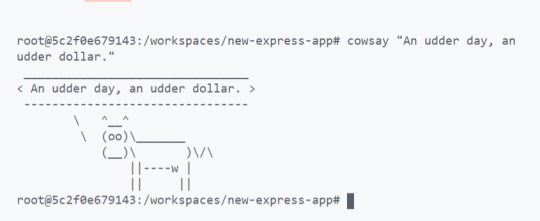

One solution would be to add it to the PATH in the Dockerfile. Like this…

    FROM mcr.microsoft.com/vscode/devcontainers/javascript-node:0-14
    RUN apt-get update \
        && apt-get -y install cowsay
    ENV PATH="/usr/games:${PATH}"
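Why does prepending /usr/games fix it? The shell finds a bare command name by checking each directory listed in PATH, in order. The package put the binary in a directory that was not on that list. Python's `shutil.which` performs the same kind of lookup, so the sketch below simulates the situation.

It uses two temporary directories: one stands in for /usr/games, and an empty one stands in for the default PATH. The fake `cowsay` script exists only for the demo. The sketch assumes a POSIX system, since it relies on the executable bit.

```python
import os
import shutil
import tempfile
from typing import Optional, Tuple


def demo_path_lookup(command: str = "cowsay") -> Tuple[Optional[str], Optional[str]]:
    """Return (found_before, found_after) for a PATH lookup of `command`."""
    with tempfile.TemporaryDirectory() as games_dir:        # plays /usr/games
        with tempfile.TemporaryDirectory() as default_dir:  # plays the default PATH
            fake = os.path.join(games_dir, command)
            with open(fake, "w") as f:
                f.write("#!/bin/sh\necho moo\n")
            os.chmod(fake, 0o755)  # executable, like a packaged binary

            # Search only the "default" directories: the command is not found.
            before = shutil.which(command, path=default_dir)
            # Prepend the games directory, like ENV PATH="/usr/games:${PATH}".
            after = shutil.which(command, path=games_dir + os.pathsep + default_dir)
            return before, after


if __name__ == "__main__":
    before, after = demo_path_lookup()
    print("without the /usr/games stand-in:", before)
    print("with it prepended:", after)
```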

EXCELLENT. We’re solving real problems here, folks. People like cow one-liners. I bullieve I herd that somewhere.

To summarize, project configuration (forwarding ports, installing project dependencies, etc.) is done in the “devcontainer.json” and environment configuration (installing software) is done in the “Dockerfile.” Now let’s get brave and try something a little more edgy.

Advanced configuration

Let’s say for a moment that you have a gorgeous, glammed out terminal setup that you really want to put in the container as well. I mean, just because you are developing in a container doesn’t mean that your terminal has to be boring. But you also wouldn’t want to reconfigure your pretentious zsh setup for every project that you open. Can we automate that too? Let’s find out.

Fortunately, zsh is already installed in the image that you get. The only trouble is that it’s not the default shell when the container opens. There are a lot of ways that you can make zsh the default shell in a normal Docker scenario, but none of them will work here. This is because the shell that VS Code’s integrated terminal opens is chosen by VS Code’s own settings, not by anything in the container build.

Instead, look again to the trusty devcontainer.json file. In it, there is a "settings" block. In fact, there is a line already there showing you that the default terminal is set to "/bin/bash". Change that to "/bin/zsh".

    // Set *default* container specific settings.json values on container create.
    "settings": {
      "terminal.integrated.shell.linux": "/bin/zsh"
    }

By the way, you can set ANY VS Code setting there. Like, you know, moving the sidebar to the right-hand side. There – I fixed it for you.

    // Set default container specific settings.json values on container create.
    "settings": {
      "terminal.integrated.shell.linux": "/bin/zsh",
      "workbench.sideBar.location": "right"
    },

And how about those pretentious plugins that make you better than everyone else? For those you are going to need your .zshrc file. The container already has oh-my-zsh in it, and it’s in the /root folder. You just need to make sure you set the ZSH path at the top of the .zshrc so that it points to /root. Like this…

    # Path to your oh-my-zsh installation.
    export ZSH="/root/.oh-my-zsh"

    # Set name of the theme to load --- if set to "random", it will
    # load a random theme each time oh-my-zsh is loaded, in which case,
    # to know which specific one was loaded, run: echo $RANDOM_THEME
    # See https://github.com/ohmyzsh/ohmyzsh/wiki/Themes
    ZSH_THEME="cloud"

    # Which plugins would you like to load?
    plugins=(zsh-autosuggestions nvm git)

    source $ZSH/oh-my-zsh.sh

Then you can copy in that sexy .zshrc file to the root folder in the Dockerfile. I put that .zshrc file in the .devcontainer folder in my project.

COPY .zshrc /root/.zshrc

And if you need to download a plugin before you install it, do that in the Dockerfile with a RUN command. Just remember to group all of these into one command since each RUN is a new layer. You are nearly a container expert now. Next step is to write a blog post about it and instruct people on the ways of Docker like you invented the thing.

RUN git clone https://github.com/zsh-users/zsh-autosuggestions ${ZSH_CUSTOM:-~/.oh-my-zsh/custom}/plugins/zsh-autosuggestions
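The `${ZSH_CUSTOM:-~/.oh-my-zsh/custom}` part of that command is shell parameter expansion with a default. If ZSH_CUSTOM is unset or empty, the shell substitutes `~/.oh-my-zsh/custom`, with `~` expanded to the home directory. For root, per the setup above, that is /root.

Here is a Python sketch of the same rule. `plugin_dir` is a hypothetical helper. The environment and home directory are passed in explicitly so the result is predictable. The sketch assumes the image does not set ZSH_CUSTOM itself.

```python
import posixpath
from typing import Mapping


def plugin_dir(env: Mapping[str, str], home: str, plugin: str) -> str:
    """Mimic ${ZSH_CUSTOM:-~/.oh-my-zsh/custom}/plugins/<plugin>.

    ':-' falls back to the default when the variable is unset OR empty,
    which is what `or` does here for a missing key or an empty string.
    """
    custom = env.get("ZSH_CUSTOM") or posixpath.join(home, ".oh-my-zsh", "custom")
    return posixpath.join(custom, "plugins", plugin)


if __name__ == "__main__":
    # Assumes ZSH_CUSTOM is not set during the image build.
    print(plugin_dir({}, "/root", "zsh-autosuggestions"))
    # -> /root/.oh-my-zsh/custom/plugins/zsh-autosuggestions
```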

Look at the beautiful terminal! Behold the colors! The git plugin which tells you the branch and adds a lightning emoji! Nothing says, “I know what I’m doing” like a customized terminal. I like to take mine to Starbucks and just let people see it in action and wonder if I’m a celebrity.

Go gently

Hopefully you made it to this point and thought, “Geez, this guy is seriously overreacting. This is not that hard.” If so, I have successfully saved you. You are welcome. No need to thank me. Yes, I do have an Amazon wish list.

For more information on Remote Containers, including how to do things like add a database or use Docker Compose, check out the official Remote Container docs, which provide much more clarity with 100% less neurotic commentary.

The post A Gentle Introduction to Using a Docker Container as a Dev Environment appeared first on CSS-Tricks.

A Gentle Introduction to Using a Docker Container as a Dev Environment published first on https://deskbysnafu.tumblr.com/

#youtube#video#codeonedigest#microservices#microservice#nodejs tutorial#nodejs express#node js development company#node js#nodejs#node#node js training#node js express#node js development services#node js application#redis cache#redis#docker image#dockerhub#docker container#docker tutorial#docker course

This Week in Rust 516

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on Twitter or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

Announcing Rust 1.73.0

Polonius update

Project/Tooling Updates

rust-analyzer changelog #202

Announcing: pid1 Crate for Easier Rust Docker Images - FP Complete

bit_seq in Rust: A Procedural Macro for Bit Sequence Generation

tcpproxy 0.4 released

Rune 0.13

Rust on Espressif chips - September 29 2023

esp-rs quarterly planning: Q4 2023

Implementing the #[diagnostic] namespace to improve rustc error messages in complex crates

Observations/Thoughts

Safety vs Performance. A case study of C, C++ and Rust sort implementations

Raw SQL in Rust with SQLx

Thread-per-core

Edge IoT with Rust on ESP: HTTP Client

The Ultimate Data Engineering Chadstack. Running Rust inside Apache Airflow

Why Rust doesn't need a standard div_rem: An LLVM tale - CodSpeed

Making Rust supply chain attacks harder with Cackle

[video] Rust 1.73.0: Everything Revealed in 16 Minutes

Rust Walkthroughs

Let's Build A Cargo Compatible Build Tool - Part 5

How we reduced the memory usage of our Rust extension by 4x

Calling Rust from Python

Acceptance Testing embedded-hal Drivers

5 ways to instantiate Rust structs in tests

Research

Looking for Bad Apples in Rust Dependency Trees Using GraphQL and Trustfall

Miscellaneous

Rust, Open Source, Consulting - Interview with Matthias Endler

Edge IoT with Rust on ESP: Connecting WiFi

Bare-metal Rust in Android

[audio] Learn Rust in a Month of Lunches with Dave MacLeod

[video] Rust 1.73 Release Train

[video] Why is the JavaScript ecosystem switching to Rust?

Crate of the Week

This week's crate is yarer, a library and command-line tool to evaluate mathematical expressions.

Thanks to Gianluigi Davassi for the self-suggestion!

Please submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

Ockam - Make ockam node delete (no args) interactive by asking the user to choose from a list of nodes to delete (tuify)

Ockam - Improve ockam enroll ----help text by adding doc comment for identity flag (clap command)

Ockam - Enroll "email: '+' character not allowed"

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from the Rust Project

384 pull requests were merged in the last week

formally demote tier 2 MIPS targets to tier 3

add tvOS to target_os for register_dtor

linker: remove -Zgcc-ld option

linker: remove unstable legacy CLI linker flavors

non_lifetime_binders: fix ICE in lint opaque-hidden-inferred-bound

add async_fn_in_trait lint

add a note to duplicate diagnostics

always preserve DebugInfo in DeadStoreElimination

bring back generic parameters for indices in rustc_abi and make it compile on stable

coverage: allow each coverage statement to have multiple code regions

detect missing => after match guard during parsing

diagnostics: be more careful when suggesting struct fields

don't suggest nonsense suggestions for unconstrained type vars in note_source_of_type_mismatch_constraint

dont call mir.post_mono_checks in codegen

emit feature gate warning for auto traits pre-expansion

ensure that ~const trait bounds on associated functions are in const traits or impls

extend impl's def_span to include its where clauses

fix detecting references to packed unsized fields

fix fast-path for try_eval_scalar_int

fix to register analysis passes with -Zllvm-plugins at link-time

for a single impl candidate, try to unify it with error trait ref

generalize small dominators optimization

improve the suggestion of generic_bound_failure

make FnDef 1-ZST in LLVM debuginfo

more accurately point to where default return type should go

move subtyper below reveal_all and change reveal_all

only trigger refining_impl_trait lint on reachable traits

point to full async fn for future

print normalized ty

properly export function defined in test which uses global_asm!()

remove Key impls for types that involve an AllocId

remove is global hack

remove the TypedArena::alloc_from_iter specialization

show more information when multiple impls apply

suggest pin!() instead of Pin::new() when appropriate

make subtyping explicit in MIR

do not run optimizations on trivial MIR

in smir find_crates returns Vec<Crate> instead of Option<Crate>

add Span to various smir types

miri-script: print which sysroot target we are building

miri: auto-detect no_std where possible

miri: continuation of #3054: enable spurious reads in TB

miri: do not use host floats in simd_{ceil,floor,round,trunc}

miri: ensure RET assignments do not get propagated on unwinding

miri: implement llvm.x86.aesni.* intrinsics

miri: refactor dlsym: dispatch symbols via the normal shim mechanism

miri: support getentropy on macOS as a foreign item

miri: tree Borrows: do not create new tags as 'Active'

add missing inline attributes to Duration trait impls

stabilize Option::as_(mut_)slice

reuse existing Somes in Option::(x)or

fix generic bound of str::SplitInclusive's DoubleEndedIterator impl

cargo: refactor(toml): Make manifest file layout more consitent

cargo: add new package cache lock modes

cargo: add unsupported short suggestion for --out-dir flag

cargo: crates-io: add doc comment for NewCrate struct

cargo: feat: add Edition2024

cargo: prep for automating MSRV management

cargo: set and verify all MSRVs in CI

rustdoc-search: fix bug with multi-item impl trait

rustdoc: rename issue-\d+.rs tests to have meaningful names (part 2)

rustdoc: Show enum discrimant if it is a C-like variant

rustfmt: adjust span derivation for const generics

clippy: impl_trait_in_params now supports impls and traits

clippy: into_iter_without_iter: walk up deref impl chain to find iter methods

clippy: std_instead_of_core: avoid lint inside of proc-macro

clippy: avoid invoking ignored_unit_patterns in macro definition

clippy: fix items_after_test_module for non root modules, add applicable suggestion

clippy: fix ICE in redundant_locals

clippy: fix: avoid changing drop order

clippy: improve redundant_locals help message

rust-analyzer: add config option to use rust-analyzer specific target dir

rust-analyzer: add configuration for the default action of the status bar click action in VSCode

rust-analyzer: do flyimport completions by prefix search for short paths

rust-analyzer: add assist for applying De Morgan's law to Iterator::all and Iterator::any

rust-analyzer: add backtick to surrounding and auto-closing pairs

rust-analyzer: implement tuple return type to tuple struct assist

rust-analyzer: ensure rustfmt runs when configured with ./

rust-analyzer: fix path syntax produced by the into_to_qualified_from assist

rust-analyzer: recognize custom main function as binary entrypoint for runnables

Rust Compiler Performance Triage

A quiet week, with few regressions and improvements.

Triage done by @simulacrum. Revision range: 9998f4add..84d44dd

1 Regressions, 2 Improvements, 4 Mixed; 1 of them in rollups

68 artifact comparisons made in total

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

[disposition: merge] RFC: Remove implicit features in a new edition

Tracking Issues & PRs

[disposition: merge] Bump COINDUCTIVE_OVERLAP_IN_COHERENCE to deny + warn in deps

[disposition: merge] document ABI compatibility

[disposition: merge] Broaden the consequences of recursive TLS initialization

[disposition: merge] Implement BufRead for VecDeque<u8>

[disposition: merge] Tracking Issue for feature(file_set_times): FileTimes and File::set_times

[disposition: merge] impl Not, Bit{And,Or}{,Assign} for IP addresses

[disposition: close] Make RefMut Sync

[disposition: merge] Implement FusedIterator for DecodeUtf16 when the inner iterator does

[disposition: merge] Stabilize {IpAddr, Ipv6Addr}::to_canonical

[disposition: merge] rustdoc: hide #[repr(transparent)] if it isn't part of the public ABI

New and Updated RFCs

[new] Add closure-move-bindings RFC

[new] RFC: Include Future and IntoFuture in the 2024 prelude

Call for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

No RFCs issued a call for testing this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Upcoming Events

Rusty Events between 2023-10-11 - 2023-11-08 🦀

Virtual

2023-10-11 | Virtual (Boulder, CO, US) | Boulder Elixir and Rust

Monthly Meetup

2023-10-12 - 2023-10-13 | Virtual (Brussels, BE) | EuroRust

EuroRust 2023

2023-10-12 | Virtual (Nuremberg, DE) | Rust Nuremberg

Rust Nürnberg online

2023-10-18 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

Operating System Primitives (Atomics & Locks Chapter 8)

2023-10-18 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2023-10-19 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2023-10-19 | Virtual (Stuttgart, DE) | Rust Community Stuttgart

Rust-Meetup

2023-10-24 | Virtual (Berlin, DE) | OpenTechSchool Berlin

Rust Hack and Learn | Mirror

2023-10-24 | Virtual (Washington, DC, US) | Rust DC

Month-end Rusting—Fun with 🍌 and 🔎!

2023-10-31 | Virtual (Dallas, TX, US) | Dallas Rust

Last Tuesday

2023-11-01 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

Asia

2023-10-11 | Kuala Lumpur, MY | GoLang Malaysia

Rust Meetup Malaysia October 2023 | Event updates Telegram | Event group chat

2023-10-18 | Tokyo, JP | Tokyo Rust Meetup

Rust and the Age of High-Integrity Languages

Europe

2023-10-11 | Brussels, BE | BeCode Brussels Meetup

Rust on Web - EuroRust Conference

2023-10-12 - 2023-10-13 | Brussels, BE | EuroRust

EuroRust 2023

2023-10-12 | Brussels, BE | Rust Aarhus

Rust Aarhus - EuroRust Conference

2023-10-12 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup at Browns

2023-10-17 | Helsinki, FI | Finland Rust-lang Group

Helsinki Rustaceans Meetup

2023-10-17 | Leipzig, DE | Rust - Modern Systems Programming in Leipzig

SIMD in Rust

2023-10-19 | Amsterdam, NL | Rust Developers Amsterdam Group

Rust Amsterdam Meetup @ Terraform

2023-10-19 | Wrocław, PL | Rust Wrocław

Rust Meetup #35

2023-10-25 | Dublin, IE | Rust Dublin

Biome, web development tooling with Rust

2023-10-26 | Augsburg, DE | Rust - Modern Systems Programming in Leipzig

Augsburg Rust Meetup #3

2023-10-26 | Delft, NL | Rust Nederland

Rust at TU Delft

2023-11-07 | Brussels, BE | Rust Aarhus

Rust Aarhus - Rust and Talk beginners edition

North America

2023-10-11 | Boulder, CO, US | Boulder Rust Meetup

First Meetup - Demo Day and Office Hours

2023-10-12 | Lehi, UT, US | Utah Rust

The Actor Model: Fearless Concurrency, Made Easy w/Chris Mena

2023-10-13 | Cambridge, MA, US | Boston Rust Meetup

Kendall Rust Lunch

2023-10-17 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2023-10-18 | Brookline, MA, US | Boston Rust Meetup

Boston University Rust Lunch

2023-10-19 | Mountain View, CA, US | Mountain View Rust Meetup

Rust Meetup at Hacker Dojo

2023-10-19 | Nashville, TN, US | Music City Rust Developers

Rust Goes Where It Pleases Pt2 - Rust on the front end!

2023-10-19 | Seattle, WA, US | Seattle Rust User Group

Seattle Rust User Group - October Meetup

2023-10-25 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

2023-10-25 | Chicago, IL, US | Deep Dish Rust

Rust Happy Hour

Oceania

2023-10-17 | Christchurch, NZ | Christchurch Rust Meetup Group

Christchurch Rust meetup meeting

2023-10-26 | Brisbane, QLD, AU | Rust Brisbane

October Meetup

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

The Rust mission -- let you write software that's fast and correct, productively -- has never been more alive. So next Rustconf, I plan to celebrate:

All the buffer overflows I didn't create, thanks to Rust

All the unit tests I didn't have to write, thanks to its type system

All the null checks I didn't have to write thanks to Option and Result

All the JS I didn't have to write thanks to WebAssembly

All the impossible states I didn't have to assert "This can never actually happen"

All the JSON field keys I didn't have to manually type in thanks to Serde

All the missing SQL column bugs I caught at compiletime thanks to Diesel

All the race conditions I never had to worry about thanks to the borrow checker

All the connections I can accept concurrently thanks to Tokio

All the formatting comments I didn't have to leave on PRs thanks to Rustfmt

All the performance footguns I didn't create thanks to Clippy

– Adam Chalmers in their RustConf 2023 recap

Thanks to robin for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust

Launching Your Own JavaScript Based Face Recognition Algorithm [A How-To Guide]

JavaScript based face recognition with Face API and Docker.

If you just want to play with a real-time face recognition algorithm without any coding, you can run the following Dockerized web app:

docker run -p 8080:8080 billyfong2007/node-face-recognition:latest

This command runs a Docker image from Docker Hub and binds the container's port 8080 to port 8080 on your computer. You can then open the face recognition app in your browser at:

localhost:8080

The webpage will access your laptop's webcam and start analysing your expressions in real time! Don't worry, this works completely offline; you'll be the only one who can view the video stream… provided your computer is not compromised. Anyway, you can start having fun making all sorts of funny faces (or sad, angry, etc.) at your computer like a silly person!

This algorithm uses Face API, which is built on top of the tensorflow.js core API. You can also train it to recognise different faces.

For those of you who are still reading, perhaps you'd like to know how to build your own Node application that uses Face API? Okay, here we go. You can find all the source files in my GitHub repo.

First, install NPM's live-server to serve your HTML:

npm install -g live-server

Build a bare-bones HTML page named index.html:

This HTML contains a video element that we'll use to stream your laptop's webcam.

Then create script.js with the following content:

The script does a few things: it loads all models from a directory asynchronously, requests permission to access your webcam, and, once the video starts streaming, creates a canvas and calls Face API to draw on it every 100 ms.

The required machine learning models can be downloaded from my GitHub repo:

Once you have index.html, script.js and the models ready, you are almost good to go. You still need to include face-api.min.js for your app to work:

Now you're ready! Start serving your HTML page with live-server:

cd /directory-to-your-project
live-server

This simple JavaScript project gave me a taste of ML, and I wish I could do more.

https://www.instagram.com/p/B_uJe9aHJMF/?igshid=ryeozw4yh89c
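The post describes script.js without reproducing it. A minimal sketch of its three steps might look like the following. It assumes the face-api.js library is loaded first through face-api.min.js (exposing the global faceapi), that the model files are served from /models, and that index.html contains a video element with id "video", width/height attributes, and autoplay muted. The specific models loaded (tiny face detector, 68-point landmarks, expressions) and the element id are assumptions, not details from the post.

```javascript
// script.js: a minimal sketch, not the post's original file.
// Assumptions: face-api.min.js is loaded before this script (global `faceapi`),
// models are served from /models, and index.html contains
// <video id="video" width="720" height="560" autoplay muted></video>.
const MODEL_URL = "/models"; // assumed location of the downloaded models

// Step 1: load the models asynchronously (which models is an assumption).
async function loadModels() {
  await Promise.all([
    faceapi.nets.tinyFaceDetector.loadFromUri(MODEL_URL),
    faceapi.nets.faceLandmark68Net.loadFromUri(MODEL_URL),
    faceapi.nets.faceExpressionNet.loadFromUri(MODEL_URL),
  ]);
}

// Step 2: ask for webcam permission and stream the camera into the <video>.
async function startVideo(video) {
  video.srcObject = await navigator.mediaDevices.getUserMedia({ video: true });
}

// Step 3: once the video plays, overlay a canvas and redraw it every 100 ms.
function startDetectionLoop(video) {
  const canvas = faceapi.createCanvasFromMedia(video);
  document.body.append(canvas); // CSS is assumed to position it over the video
  const displaySize = { width: video.width, height: video.height };
  faceapi.matchDimensions(canvas, displaySize);

  return setInterval(async () => {
    const detections = await faceapi
      .detectAllFaces(video, new faceapi.TinyFaceDetectorOptions())
      .withFaceLandmarks()
      .withFaceExpressions();
    const resized = faceapi.resizeResults(detections, displaySize);
    canvas.getContext("2d").clearRect(0, 0, canvas.width, canvas.height);
    faceapi.draw.drawDetections(canvas, resized);
    faceapi.draw.drawFaceLandmarks(canvas, resized);
    faceapi.draw.drawFaceExpressions(canvas, resized);
  }, 100);
}

// Wire everything up only when running inside a browser page.
if (typeof document !== "undefined") {
  const video = document.getElementById("video");
  video.addEventListener("play", () => startDetectionLoop(video));
  loadModels()
    .then(() => startVideo(video))
    .catch((err) => console.error(err));
}
```

The 100 ms setInterval mirrors the redraw rate the post mentions; startDetectionLoop returns the interval id so a caller could stop the loop with clearInterval.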

Setting up Nuxt TypeScript with docker-compose

from https://qiita.com/Morero/items/7a6abd4f9402aa345107?utm_campaign=popular_items&utm_medium=feed&utm_source=popular_items

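Introduction

Setup instructions for Nuxt TypeScript have been published, but there was little information on building the environment with docker-compose, so I have put this guide together.

Also, the procedure for introducing TypeScript changed from Ver. 2.9 onward, and I personally stumbled over a few parts, so I will share those points as well.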

1. Environment

macOS Catalina Ver.10.15.1

Docker version 19.03.4, build 9013bf5

docker-compose version 1.24.1, build 4667896b

Nuxt.js Ver.2.10.2

node.js 12.13.0-alpine

2. Prerequisites

Nuxt.js version 2.9 or later.

docker, docker-compose, and node.js are installed on your machine.

If docker, docker-compose, or node.js is not installed, follow the steps below.

2.1. Installing Docker

To install Docker, go to Docker Hub and download Docker.dmg. Note: if this is your first time using Docker Hub, you need to create an account.

Opening Docker.dmg starts the Docker installation; once it completes, copy Docker to Applications.

Alternatively, you can install Docker with Homebrew, a package manager. The steps are shown below.

# Install Homebrew
$ /usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"
# Check that Homebrew is installed correctly
$ brew doctor
# Install Docker
$ brew install docker
$ brew cask install docker

You can check the Docker and Homebrew versions with the following commands.

# Docker
$ docker --version
Docker version 19.03.4, build 9013bf5

# Homebrew
$ brew --version
Homebrew 2.1.16
Homebrew/homebrew-core (git revision 00c2c; last commit XXXX-XX-XX)
Homebrew/homebrew-cask (git revision 9e283; last commit XXXX-XX-XX)

2.2. Installing docker-compose

To install docker-compose, run the following commands.

$ curl -L https://github.com/docker/compose/releases/download/1.3.1/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose
# Set permissions so the docker-compose command can be executed
$ chmod +x /usr/local/bin/docker-compose

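Note: if a Permission denied error appears while running these commands, the /usr/local/bin directory may not be writable. In that case, install Compose as the superuser: run sudo -i, run the two commands above, and then exit.

3. Setting up the Nuxt.js environment

Before converting to TypeScript, we first need to create a Nuxt.js project, so this section explains how to do that.

3.1. File structure

The file structure used in this article is as follows. To avoid mixing many different kinds of files in a single directory, I created a new project directory and placed the Dockerfile inside it.

I also wanted to keep the file hierarchy of the Nuxt.js project tidy, so I created a new src directory and grouped the source directories inside it.

The file structure is shown below.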

.
├── README.md
├── docker-compose.yml
└── project
    ├── Dockerfile
    ├── README.md
    ├── node_modules
    ├── nuxt.config.ts
    ├── package.json
    ├── src
    │   ├── assets
    │   ├── components
    │   ├── layouts
    │   ├── middleware
    │   ├── pages
    │   ├── plugins
    │   ├── static
    │   ├── store
    │   └── vue-shim.d.ts
    ├── tsconfig.json
    ├── yarn-error.log
    └── yarn.lock

3.2. Dockerfile

First, create the Dockerfile. An official node.js image is published on Docker Hub, so we use that.

Dockerfile

# Specify the base image
FROM node:12.13.0-alpine

# Run commands
RUN apk update && \
    apk add git && \
    npm install -g @vue/cli nuxt create-nuxt-app

I used alpine here to keep the image lightweight.

* alpine: Alpine Linux, a Linux distribution based on BusyBox and musl.

3.3. docker-compose.yml

Next, create docker-compose.yml.

docker-compose.yml

version: '3'
services:
  node:
    # Location of the Dockerfile
    build:
      context: ./
      dockerfile: ./project/Dockerfile
    working_dir: /home/node/app/project
    # Share the source code between the host OS and the container
    volumes:
      - ./:/home/node/app
    # Access the container's port 3000 from outside via port 5000
    ports:
      - "5000:3000"
    environment:
      - HOST=0.0.0.0

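3.4. Building the Docker image

After creating the Dockerfile and docker-compose.yml, run the following command to build the Docker image.

$ docker-compose build

Afterward, you can check whether the image was created with the following command.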

$ docker images
REPOSITORY                    TAG              IMAGE ID       CREATED       SIZE
docker-nuxt-typescript_node   latest           7f8324973b48   3 days ago    434MB
node                          12.13.0-alpine   5d187500daae   3 weeks ago   80.1MB

3.5. Launching Nuxt.js

To run npm or yarn commands inside the container, specify them as follows.

$ docker-compose run --rm <Service Name> <Command>

Run the following command to launch create-nuxt-app and set up the Nuxt.js project.

$ docker-compose run --rm node npx create-nuxt-app ./project
Project name (your-title)
Project description (My wicked Nuxt.js project)
Author name (author-name)
Choose the package manager
> Yarn
  Npm
Choose UI framework (Use arrow keys)
> None
  Ant Design Vue
  Bootstrap Vue
  Buefy
  Bulma
  Element
  Framevuerk
  iView
  Tachyons
  Tailwind CSS
  Vuetify.js
Choose custom server framework (Use arrow keys)
> None (Recommended)
  AdonisJs
  Express
  Fastify
  Feathers
  hapi
  Koa
  Micro
# Select items with the Space key (note: not the Enter key)
Choose Nuxt.js modules
>(*) Axios
 ( ) Progressive Web App (PWA) Support
Choose linting tools
>(*) ESLint
 ( ) Prettier
 ( ) Lint staged files
Choose test framework
> None
  Jest
  AVA
Choose rendering mode (Use arrow keys)
  Universal (SSR)   // WEB SITE
> Single Page App   // WEB APPLICATION
Choose development tools
>(*) jsconfig.json (Recommended for VS Code)

The settings above are the ones I chose for my own setup; choose whichever options you need.

3.6. Starting the container

Run the following command to start the container.

$ docker-compose run --service-ports node npm run dev
# OR
$ docker-compose run --service-ports node yarn run dev

After startup, open http://localhost:5000/. If you can see the sample app page, this part is done.

4. Converting to TypeScript

Finally, this section explains how to switch Nuxt.js over to TypeScript.

4.1. Installing @nuxt/typescript-build and @nuxt/typescript-runtime